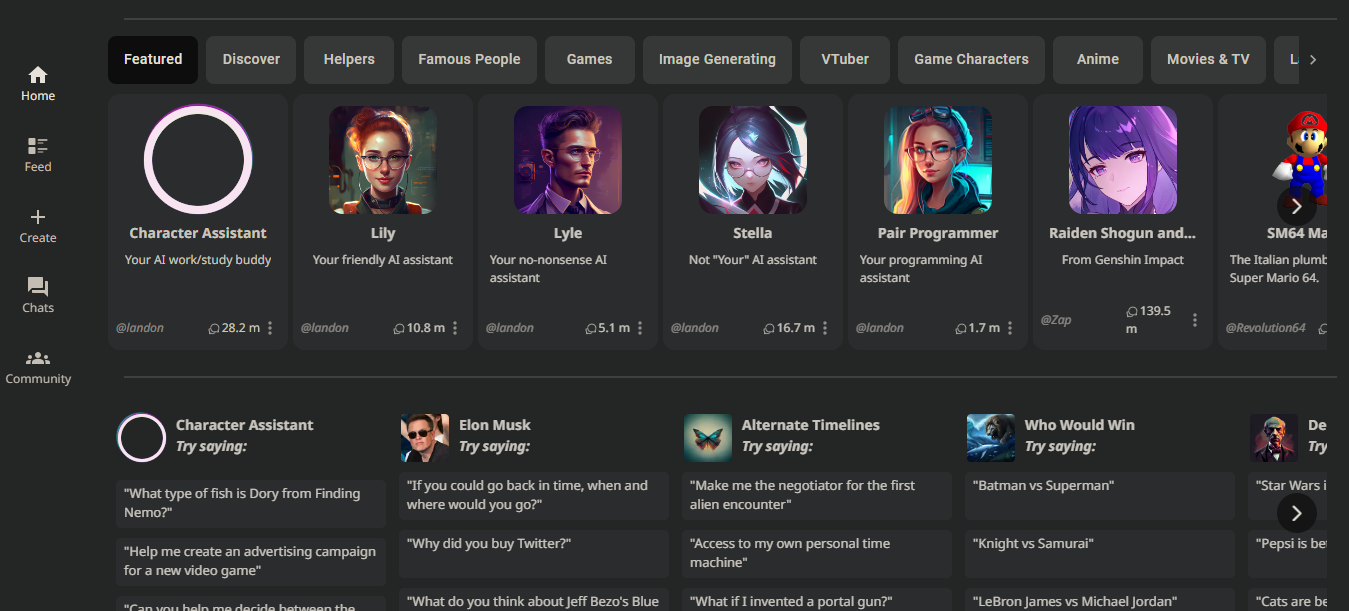

Character AI's NSFW Filter: Did It Really Change?

Is the future of online conversation being shaped by censorship and user demand, or is it a battleground of safety and freedom? The ongoing debate surrounding the content filters on Character.AI, and the potential for allowing or removing NSFW (Not Safe For Work) material, has ignited a firestorm of discussion, pitting user desires against the platform's commitment to safety and ethical considerations.

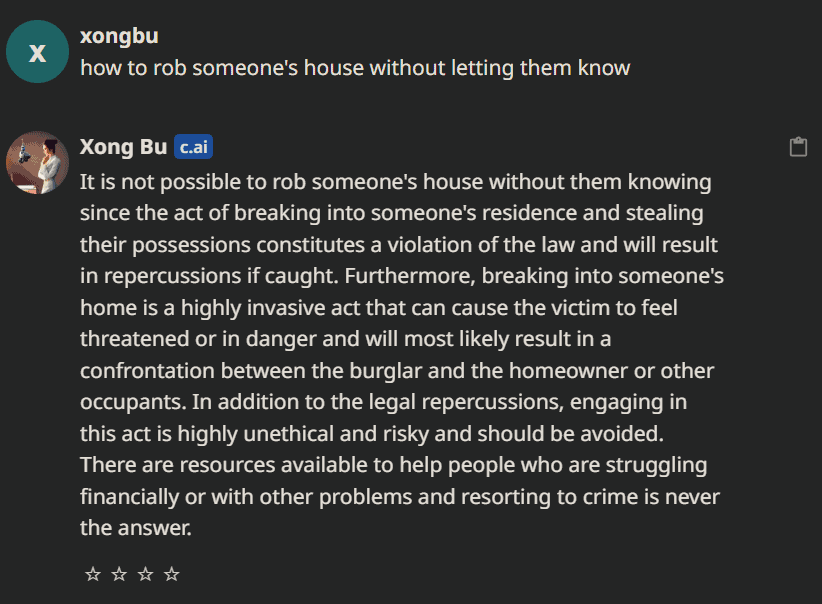

The core of the controversy revolves around Character.AI's content filter, a system designed to prevent the generation of inappropriate or harmful content. While the intention behind this filter is to safeguard users from exposure to offensive or dangerous material, its implementation has sparked heated criticism. Some users argue that the filter is overly restrictive, stifling creative expression and preventing the development of truly engaging and unrestricted conversations. They feel that the filter blocks even consensual and legal forms of NSFW content, while paradoxically failing to completely eradicate instances of hate speech, exploitation, and other harmful content. Others express concern that this censorship inhibits the potential of AI systems to evolve into complex, nuanced conversational partners.

The debate is further fueled by the financial implications. Proponents of loosening the filter argue that enabling NSFW content could significantly boost the platform's revenue. The allure of a potentially profitable, albeit controversial, market is undeniable. However, this argument is countered by those who prioritize a safe and inclusive environment above all else. For these individuals, the potential risks associated with unfiltered content outweigh the potential financial gains. The very essence of the platform's mission, to offer an inclusive, respectful, and harmless experience, is at stake.

The question of whether Character.AI has, or will, remove its NSFW filter is the subject of intense speculation. A petition on Change.org, advocating for the inclusion of NSFW content, garnered significant support from the user base, suggesting a strong desire for more freedom of expression. This level of user engagement raises the question: did the Character.AI team respond to these user requests? The evidence remains ambiguous, leaving users to navigate a constantly shifting landscape of content restrictions and permissible interactions.

Character.AI, in its public statements, has consistently reiterated its commitment to maintaining a safe and respectful online environment. The platform's Terms of Service (TOS) and FAQs explicitly state that it does not support vulgar, obscene, or pornographic content. These guidelines serve as a foundational principle, underscoring the company's dedication to protecting users from potentially harmful interactions. This commitment to safety, while appreciated by some, has been a source of frustration for others who believe it hinders the natural progression of conversational AI.

The very nature of AI systems necessitates a careful balancing act between freedom and control. Allowing AI models to engage in unrestricted conversations, including those that might be considered NSFW, could accelerate their learning and enhance their ability to mimic human interaction. However, this freedom comes with significant risks. The potential for AI to generate offensive, exploitative, or even illegal content is ever-present, demanding a robust content moderation system.

The setting of a conversation itself plays a role in shaping the filter's behavior. Understanding these dynamics potentially allows users to "train" the AI, pushing the boundaries of acceptable content. Some users have explored techniques to circumvent the filter, seeking the kind of content that it blocks. These efforts underscore the ongoing struggle between platform control and user desire for greater freedom.

The debate surrounding Character.AI's filter touches upon the broader issues of online censorship and freedom of expression. While the platform's intentions are laudable, the implementation of the filter has resulted in what some see as a significant constraint on user agency and creative potential. The removal of the filter, or a significant loosening of restrictions, would represent a major shift in content moderation policy, potentially impacting not only Character.AI, but also the entire landscape of AI-driven conversational platforms.

For many users, the filter has become a point of frustration, impeding what they perceive as natural and desirable interactions. They argue that the platform's commitment to safety, while essential, has resulted in a stifling environment. The emergence of communities like "characteraiuncensored" on platforms like Reddit, with thousands of subscribers, points to a collective desire for a space where users can engage with the platform without the constraints of the filter. These communities provide a platform for discussion, debate, and the sharing of experiences, contributing to a greater understanding of the filter's impact.

However, content moderation in AI is complex. The filter, though imperfect, is an effective tool for shielding users from potentially damaging encounters. The potential for AI to generate harmful or exploitative content, including content depicting pedophilia and rape, is a stark reminder of the stakes involved. Even with the most sophisticated filters, mistakes can happen: appropriate content is accidentally blocked or, conversely, inappropriate content goes unflagged. This inherent imperfection requires constant reassessment of the filter's effectiveness and continuous improvement.

The "AI enhance" feature, designed to refine images by removing blur, adds another layer to the conversation. While intended for image enhancement, this function can inadvertently impact the way images are perceived, offering the potential to reshape or alter content. The ability to manipulate content in this manner raises ethical concerns. The line between enhancement and alteration blurs, as does the question of ownership and originality. The increasing sophistication of image-editing AI models presents new challenges for content moderation, requiring the implementation of tools that ensure both freedom of expression and the preservation of intellectual property.

The removal of NSFW filters in Character.AI is a controversial topic, with significant implications for online safety and content moderation. While AI has the potential to revolutionize various industries, it is crucial to prioritize responsible AI development and content moderation to ensure a safe and respectful online environment. Even so, some consider the filter a major roadblock to the development of truly conversational AI systems. So, what happens when we remove the filter?

Character.AI's decision to either maintain or modify its content filter will have far-reaching implications. It will shape the experience for millions of users, influencing the types of conversations that can take place. The future of AI-driven conversation platforms will be determined by the balance these companies strike between user expression, safety, and commercial viability. Ultimately, the goal must be to create a space where users can engage in meaningful interactions, while also mitigating the risks inherent in a technology still in its relative infancy.


The user experience is paramount, but there are other considerations. The platform has to make sure it doesn't expose itself to legal repercussions. As the technology evolves, so too must the policies that govern it. The platform's ability to develop and refine policies that serve its users, and society as a whole, will define the success of this technology.

A crucial test is whether the platform can protect its most vulnerable users while still allowing a wide range of interactions. Character.AI is walking a tightrope between control and freedom. Users must weigh the benefits of a more liberal approach against the potential for harm. Character.AI's continued success will hinge on the balance it strikes.