Fixing Garbled Characters: Guide & Solutions For Unicode Issues

Have you ever encountered a digital text that looks like a jumbled mess of symbols and characters instead of the words you expect? This frustrating phenomenon, often called "mojibake," can transform perfectly readable text into an incomprehensible sequence of seemingly random characters, hindering communication and causing significant data integrity issues.

The issue typically arises when text data, intended to be displayed using a specific character encoding, is interpreted using a different encoding. This mismatch leads to the incorrect mapping of character codes to glyphs, resulting in the display of unexpected characters. Common examples of this include seeing sequences like "\u00e3\u00ab," "\u00e3," "\u00e3\u00ac," "\u00e3\u00b9," or simply "\u00e3" in place of the intended characters. The problem isn't limited to a few specific characters; the entire text can become corrupted, rendering the information useless. This can happen for a variety of reasons, from incorrect settings during data storage to mismatches in web page headers or database configurations. The underlying cause always comes down to a discrepancy between how the text was encoded (saved) and how it is being decoded (displayed).

To better understand the intricacies of text encoding and mojibake, let's delve into a simplified exploration of the processes and potential solutions. Consider a basic scenario where text needs to be correctly stored, retrieved, and displayed.

A text encoding system is a method of representing characters as a sequence of bits. Different encodings use different tables to map characters to their binary representations. These encoding systems are critical for digital communication because they bridge the gap between human-readable text and the binary format computers use internally.

For instance, the well-known ASCII (American Standard Code for Information Interchange) encoding, a foundational system, assigns a 7-bit code to each character. ASCII, while simple and widely supported, is restricted because it can only represent 128 characters. This limitation means it can't accommodate a wide variety of characters used in many languages, including the accented characters and special symbols. The demand for encoding systems that could handle a wider range of characters in numerous languages led to the creation of more advanced encodings, most notably Unicode and its various implementations, like UTF-8.

Unicode is a character encoding standard that provides a unique number (code point) for every character, irrespective of the platform, program, or language. It encompasses almost all characters found in the worlds writing systems. UTF-8 is one of the most widespread encoding for Unicode. UTF-8 is flexible, as it can represent all Unicode characters while also being backwards compatible with ASCII. This means UTF-8 encoded text can safely include standard ASCII characters without issues. The benefit is obvious: a document can include text in several languages without needing to change the encoding.

The core issue is that if data is encoded with UTF-8, but the system attempts to read it with a different encoding, it would result in a translation problem. For example, a character like "" (latin small letter e with acute) has a specific code point in Unicode, and when encoded using UTF-8, it is represented as a sequence of bytes. However, if the program tries to interpret these bytes using a different encoding, like Windows-1252, which is a single-byte encoding, the interpretation will be wrong, and instead of "", it might show "\u00e3" or similar strings. This is a mojibake in action.

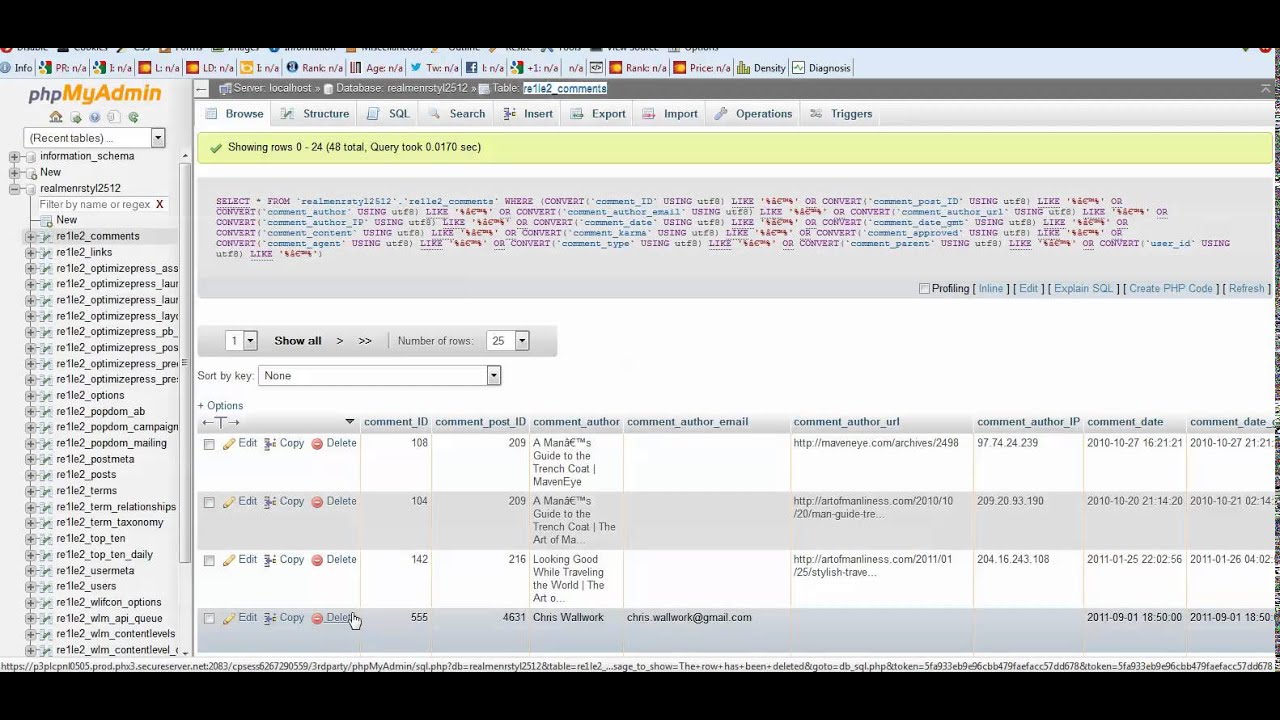

The source of the problem can often be traced to a mismatch in configurations. When working with web pages, the `` tag in the HTML header specifies the character encoding. If the declared encoding doesn't match the actual encoding of the document, mojibake occurs. Similarly, when storing data in databases like MySQL or SQL Server, the database and the table must be configured to use the correct character set, such as UTF-8, to correctly store and retrieve the characters.

Let's consider the practical steps to address mojibake. One of the first and often most effective approaches involves identifying the original encoding of the garbled text. This can sometimes be inferred by examining the patterns of the garbled characters. For example, if you see a consistent appearance of specific sequences, you might be able to guess the incorrect encoding that was used. Online tools and libraries can help to decode text, allowing you to experiment with various encodings to find one that correctly transforms the garbled text into the original text.

In many cases, the solution requires updating the configuration of your system components. For web pages, this means ensuring the `` tag correctly declares the character encoding, like UTF-8. For databases, this entails setting the correct character set and collation for the database and tables. Collation refers to the set of rules that the database uses to sort and compare text. In SQL Server, for example, the collation should be set to a UTF-8 compatible option, like `SQL_Latin1_General_CP1_CI_AS` to facilitate correct character handling.

When dealing with existing corrupted data, you may need to perform data conversion. This can involve reading the data, decoding it using the incorrect encoding, and then re-encoding it using the correct encoding, such as UTF-8. Many programming languages offer built-in functions or libraries for this purpose. For example, in Python, libraries like `chardet` can be used to detect the original encoding and then convert the text to UTF-8.

Dealing with a severely garbled database can be particularly challenging. In cases where multiple encodings have been applied in error, such as an "octuple mojibake" where a single character has been transformed multiple times through incorrect encoding and decoding, a more strategic approach may be needed. It might involve using a combination of decoding, pattern analysis, and SQL queries to systematically correct the character data.

Here are some examples of SQL queries that you may find useful for correcting common mojibake issues. The specifics will vary depending on your database system (MySQL, SQL Server, etc.)

For MySQL, you might use queries like these to correct characters:

UPDATE your_tableSET your_column = CONVERT(CONVERT(your_column USING latin1) USING utf8)WHERE your_column LIKE '%ã%'; -- Identify rows with mojibake

In SQL Server, where the collation is important, you might use queries such as:

--Example to correct some issues with incorrect character representationsUPDATE your_tableSET your_column = CONVERT(VARCHAR(MAX), your_column COLLATE Latin1_General_100_CI_AS)WHERE your_column LIKE '%ã%';

It's also important to examine the context of the garbled characters. For example, if you're seeing sequences like "\u00c2\u20ac\u00a2" or "\u00e2\u20ac," you might be dealing with the representation of characters such as the Euro symbol "" or a hyphen. Understanding the intended characters helps determine the correct fixes.

Excel and similar spreadsheet programs provide tools such as "Find and Replace" that are useful for mass character replacements. If you know that "\u00e2\u20ac\u201c" should be a hyphen, then you can use Excel's Find and Replace to correct the data in your spreadsheet. Be sure to save your data with UTF-8 encoding when exporting from the spreadsheet to ensure the corrected characters are correctly stored.

For data stored on a data server and received via an API, ensure that the API and the file-saving process correctly handle and encode the data using UTF-8. Incorrect handling at the API level or during file storage can lead to persistent mojibake issues. Verify that the headers returned by the API include `Content-Type: application/json; charset=utf-8` for JSON data and that the file saving process uses UTF-8 encoding. This will reduce the likelihood of encoding errors.

In conclusion, resolving mojibake requires a careful approach, starting with understanding the principles of character encoding and diagnosing the root cause of the problem. By identifying the incorrect encoding, reconfiguring your systems appropriately, and employing data conversion techniques, you can successfully restore your data's readability and integrity. It is crucial to adopt best practices for encoding in web development, databases, and file handling to prevent this issue.

| Character Encoding | A system that assigns a unique numerical value (code point) to each character. |

| ASCII | A 7-bit character encoding capable of representing 128 characters. |

| Unicode | A comprehensive character encoding standard which provides a unique code point for every character. |

| UTF-8 | A variable-width character encoding that uses 8-bit code units. It is widely used for encoding Unicode characters. |

| Mojibake | Garbled text that appears when data is decoded using an incorrect character encoding. |

| Collation | A set of rules that the database uses to sort and compare text. |